My research interests include computer vision and machine learning. I lead a young and energetic research team that has published more than 100 papers in top peer-reviewed conferences and journals in these areas (e.g. CVPR, NeurIPS, TPAMI and TIP). I was the founding Chair of the IEEE Computational Intelligence Society, Malaysia Chapter.

I currently serve as an Associate Editor of Pattern Recognition (Elsevier) and have co-organized several conferences, workshops, tutorials and challenges related to computer vision and machine learning. I was the recipient of Top Research Scientists Malaysia (TRSM) in 2022, Young Scientists Network Academy of Sciences Malaysia (YSN-ASM) in 2015 and the Hitachi Research Fellowship in 2013. I am also a Senior Member (IEEE), Professional Engineer (BEM) and Chartered Engineer (IET).

From 2020 to 2022, I was seconded to the Ministry of Science, Technology and Innovation (MOSTI) as the Lead of the PICC Unit under the COVID-19 Immunisation Task Force (CITF), as well as the Undersecretary for the Division of Data Strategic and Foresight.

Highlights:

08/2023: One (1) paper to appear in BMVC-2023. Please see Project Page.

06/2023: Two (2) papers to appear in ICIP-2023. Please see Project 1 and Project 2.

09/2022: One (1) paper to appear in AACL IJCNLP-2022. Please see Project Page.

Professor at Universiti Malaya

Latest Works

Cycle-object consistency for image-to-image domain adaptation

C-T. Lin, J-L. Kew, C.S. Chan, S-H. Lai and C. Zach

Pattern Recognition (2023)

This work focuses on image-to-image domain adaptation (see the figure below), where we introduce an instance-aware GAN framework, AugGAN-Det, that jointly trains a generator with an object detector (for image-object style) and a discriminator (for global style).

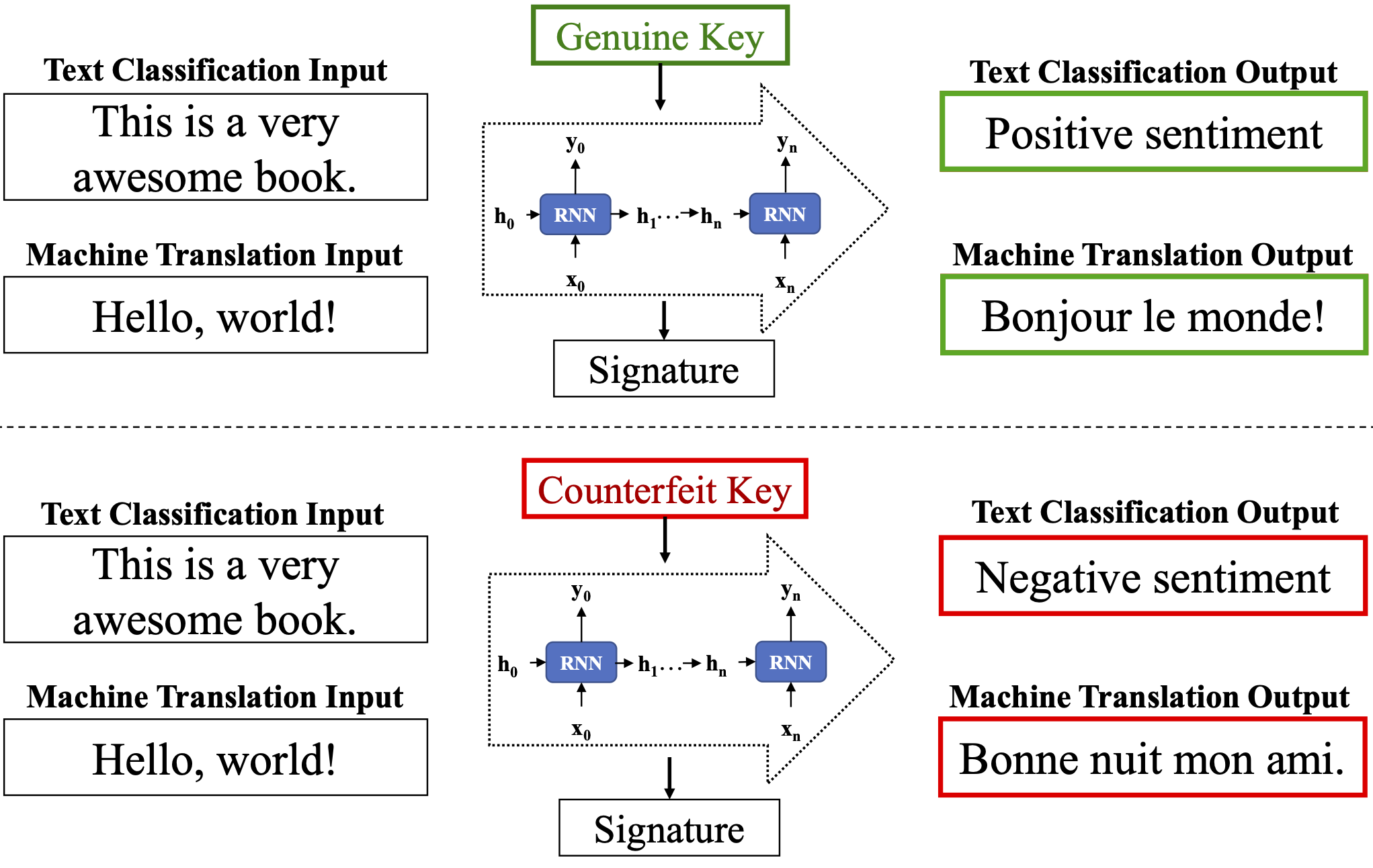

An Embarrassingly Simple Approach for Intellectual Property Rights Protection on Recurrent Neural Networks

Z.Q. Tan, H.S. Wong and C.S. Chan

AACL IJCNLP 2022 (oral long paper, acceptance rate: 87/554 ~ 15.7%)

This paper proposes a practical approach to intellectual property rights (IPR) protection for recurrent neural networks (RNNs), based on the Gatekeeper concept.

DeepIPR: Deep Neural Network Ownership Verification with Passports

L. Fan, K.W. Ng, C.S. Chan and Q. Yang

IEEE Transactions on Pattern Analysis and Machine Intelligence (2022)

We propose novel passport-based DNN ownership verification schemes that are both robust to network modifications and resilient to ambiguity attacks. This work extends our NeurIPS 2019 paper (acceptance rate: 1428/6743 ~ 21.18%).
